You may use the ecinteractive tool to open interactive sessions with dedicated resources on the HPCF.

Those sessions run as an interactive job in the batch system, and allow you to avoid the stricter limits on CPUs, CPU time or memory that apply to standard interactive sessions on the login nodes.

However, note that they are time constrained: once the job has reached its time limit, the session will be closed.

The main features of this ecinteractive tool are the following:

$ ecinteractive -h

Usage : /usr/local/bin/ecinteractive [options] [--]

-d|desktop Submits a vnc job (default is interactive ssh job)

-j|jupyter Submits a jupyter job (default is interactive ssh job)

-J|jupyters Submits a jupyter job with HTTPS support (default is interactive ssh job)

More Options:

-h|help Display this message

-v|version Display script version

-p|platform Platform (default aa. Choices: aa, ab, ac, ad, ecs)

-u|user ECMWF User (default user)

-A|account Project account

-c|cpus Number of CPUs (default 2)

-m|memory Requested Memory (default 8G)

-s|tmpdirsize Requested TMPDIR size (default 3 GB)

-t|time Wall clock limit (default 06:00:00)

-k|kill Cancel any running interactive job

-q|query Check running job

-o|output Output file for the interactive job (default /dev/null)

-x set -x

You can get an interactive shell running on an allocated node within the Atos HPCF by just calling ecinteractive. By default it will use the following settings:

| Cpus | 2 |
|---|---|
| Memory | 8 GB |
| Time | 6 hours |
| TMPDIR size | 3 GB |

If you need more resources, you may use the ecinteractive options when creating the job. For example, to get a shell with 4 CPUs and 16 GB of memory for 12 hours:

[user@aa6-100 ~]$ ecinteractive -c4 -m 16G -t 12:00:00

Submitted batch job 10225018

Waiting 5 seconds for the job to be ready...

Using interactive job:

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225018 RUNNING aa6-104 12:00:00 11:59:55 4 16G ssdtmp:3G

To cancel the job:

/usr/local/bin/ecinteractive -k

Last login: Mon Dec 13 09:39:09 2021

[ECMWF-INFO-z_ecmwf_local.sh] /usr/bin/bash INTERACTIVE on aa6-104 at 20211213_093914.794, PID: 1736962, JOBID: 10225018

[ECMWF-INFO-z_ecmwf_local.sh] $SCRATCH=/ec/res4/scratch/user

[ECMWF-INFO-z_ecmwf_local.sh] $PERM=/ec/res4/perm/user

[ECMWF-INFO-z_ecmwf_local.sh] $HPCPERM=/ec/res4/hpcperm/user

[ECMWF-INFO-z_ecmwf_local.sh] $TMPDIR=/etc/ecmwf/ssd/ssd1/tmpdirs/user.10225018

[ECMWF-INFO-z_ecmwf_local.sh] $SCRATCHDIR=/ec/res4/scratchdir/user/8/10225018

[ECMWF-INFO-z_ecmwf_local.sh] $_EC_ORIG_TMPDIR=N/A

[ECMWF-INFO-z_ecmwf_local.sh] $_EC_ORIG_SCRATCHDIR=N/A

[ECMWF-INFO-z_ecmwf_local.sh] Job 10225018 time left: 11:59:54

[user@aa6-104 ~]$

If you log out, the job continues to run until it is explicitly cancelled or reaches its time limit.
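If you request the same resources often, the options can be assembled by a small shell function. The following is only a sketch: `ecint_cmd` is a hypothetical helper name, it prints the command rather than running it, and it assumes the wall clock limit is given as HH:MM:SS as in the help text. Only the `-c`, `-m` and `-t` options listed above are used, with their documented defaults.

```shell
# Hypothetical helper (not part of ecinteractive): print the command line
# for a given CPU count, memory and wall clock limit without running it.
ecint_cmd() {
    local cpus="${1:-2}"            # default from the help text
    local memory="${2:-8G}"         # default from the help text
    local walltime="${3:-06:00:00}" # default from the help text

    # Assumption: only the HH:MM:SS form is accepted by this sketch.
    if ! printf '%s\n' "$walltime" | grep -Eq '^[0-9]{1,2}:[0-5][0-9]:[0-5][0-9]$'; then
        echo "invalid time limit: $walltime" >&2
        return 1
    fi

    printf 'ecinteractive -c %s -m %s -t %s\n' "$cpus" "$memory" "$walltime"
}

ecint_cmd 4 16G 12:00:00
# prints: ecinteractive -c 4 -m 16G -t 12:00:00
```

Printing the command first makes it easy to review the request before submitting it.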

Once you have an interactive job running, you may reattach to it, or open several shells within that job calling ecinteractive again.

If you have a job already running, ecinteractive will always attach you to that one regardless of the resources options you pass. If you wish to run a job with different settings, you will have to cancel it first.

[user@aa6-100 ~]$ ecinteractive

Using interactive job:

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225018 RUNNING aa6-104 12:00:00 11:57:56 4 16G ssdtmp:3G

WARNING: Your existing job 10225018 may have a different setup than requested. Cancel the existing job and rerun if you with to run with different setup

To cancel the job:

/usr/local/bin/ecinteractive -k

Last login: Mon Dec 13 09:39:14 2021 from aa6-100.bullx

[ECMWF-INFO-z_ecmwf_local.sh] /usr/bin/bash INTERACTIVE on aa6-104 at 20211213_094114.197, PID: 1742608, JOBID: 10225018

[ECMWF-INFO-z_ecmwf_local.sh] $SCRATCH=/ec/res4/scratch/user

[ECMWF-INFO-z_ecmwf_local.sh] $PERM=/ec/res4/perm/user

[ECMWF-INFO-z_ecmwf_local.sh] $HPCPERM=/ec/res4/hpcperm/user

[ECMWF-INFO-z_ecmwf_local.sh] $TMPDIR=/etc/ecmwf/ssd/ssd1/tmpdirs/user.10225018

[ECMWF-INFO-z_ecmwf_local.sh] $SCRATCHDIR=/ec/res4/scratchdir/user/8/10225018

[ECMWF-INFO-z_ecmwf_local.sh] $_EC_ORIG_TMPDIR=N/A

[ECMWF-INFO-z_ecmwf_local.sh] $_EC_ORIG_SCRATCHDIR=N/A

[ECMWF-INFO-z_ecmwf_local.sh] Job 10225018 time left: 11:57:54

[user@aa6-104 ~]$

You may query ecinteractive for existing interactive jobs, from within or outside the job. This can be useful to see how much time is left:

[user@aa6-100 ~]$ ecinteractive -q

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225018 RUNNING aa6-104 12:00:00 11:55:40 4 16G ssdtmp:3G
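The TIME_LEFT column of the query output can also be read from a script, for instance to warn yourself before the session expires. The sketch below assumes the output has the header and single data row shown in the query example, and that the time is in H:MM:SS form with no days component. `time_left` and `to_seconds` are hypothetical helper names, and the sample text is copied from this page rather than produced by a live call.

```shell
# Hypothetical helper: read `ecinteractive -q` style output on stdin and
# print the TIME_LEFT value of the first job listed.
time_left() {
    awk '
        # Header line: remember which column holds TIME_LEFT.
        $1 == "CLUSTER" {
            for (i = 1; i <= NF; i++) if ($i == "TIME_LEFT") col = i
            next
        }
        # First data line with enough fields: print that column and stop.
        col && NF >= col { print $col; exit }
    '
}

# Hypothetical helper: convert H:MM:SS on stdin to seconds.
to_seconds() {
    awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Sample output copied from the query example on this page.
sample='CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE
aa 10225018 RUNNING aa6-104 12:00:00 11:55:40 4 16G ssdtmp:3G'

printf '%s\n' "$sample" | time_left               # prints 11:55:40
printf '%s\n' "$sample" | time_left | to_seconds  # prints 42940
```

Against a live session the same helpers would be fed with `ecinteractive -q | time_left`. Looking up the column by its header name, rather than by a fixed position, keeps the sketch working if columns are reordered.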

Logging out of your interactive shells spawned through ecinteractive will not cancel the job. If you have finished working with it, you should cancel it with:

[user@aa6-100 ~]$ ecinteractive -k

cancelling job 10225018...

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225018 RUNNING aa6-104 12:00:00 11:55:34 4 16G ssdtmp:3G

Cancel job_id=10225018 name=user-ecinteractive partition=inter [y/n]? y

Connection to aa-login closed.

If you need to run graphical applications, you can do so through the standard X11 forwarding.

Alternatively, you may use ecinteractive to open a basic window manager running on the allocated interactive node, which will open a VNC client on your end to connect to the running desktop in the allocated node:

[user@aa6-100 ~]$ ecinteractive -d

Submitted batch job 10225277

Waiting 5 seconds for the job to be ready...

Using interactive job:

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225277 RUNNING aa6-104 6:00:00 5:59:55 2 8G ssdtmp:3G

To cancel the job:

/usr/local/bin/ecinteractive -k

Attaching to vnc session...

To manually re-attach:

vncviewer -passwd ~/.vnc/passwd aa6-104:9598

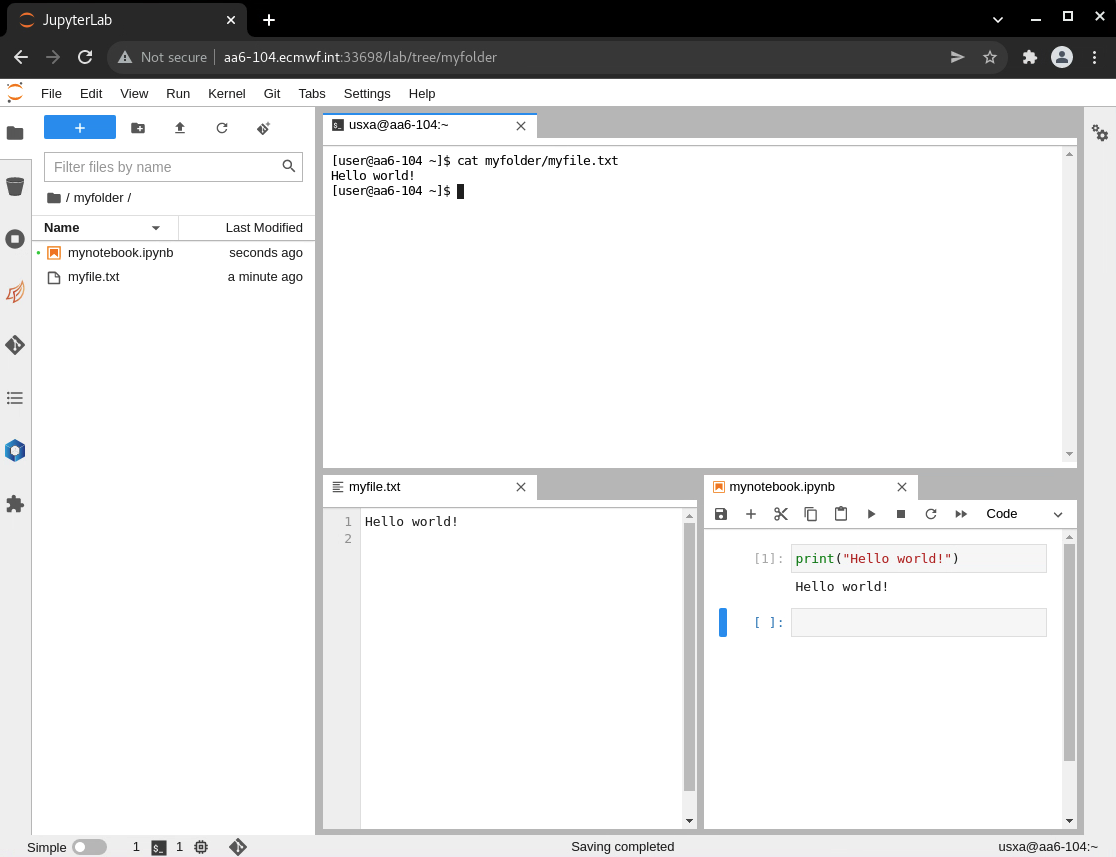

You can also use ecinteractive to open up a Jupyter Lab instance on the HPCF. The application would effectively run on the allocated node for the job, and would allow you to conveniently interact with it from your browser. When running from VDI or your end user device, ecinteractive will try to open it in a new tab automatically. Alternatively you may manually open the URL provided to connect to your Jupyter Lab session.

[user@aa6-100 ~]$ ecinteractive -j

Using interactive job:

CLUSTER JOBID STATE EXEC_HOST TIME_LIMIT TIME_LEFT MAX_CPUS MIN_MEMORY TRES_PER_NODE

aa 10225277 RUNNING aa6-104 6:00:00 5:58:07 2 8G ssdtmp:3G

To cancel the job:

/usr/local/bin/ecinteractive -k

Attaching to Jupyterlab session...

To manually re-attach go to http://aa6-104.ecmwf.int:33698/?token=b1624da17308654986b1fd66ef82b9274401ea8982f3b747

To use your own conda environment as a kernel for Jupyter notebooks, ipykernel must be installed in that environment before you start the ecinteractive job. It can be installed with:
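If you capture the output of ecinteractive, the re-attach URL can be pulled out and split into host and port with standard shell tools. This is a sketch only: `jupyter_url` is a hypothetical helper name, and it assumes the URL appears in the output in the form shown in the Jupyter example on this page.

```shell
# Hypothetical helper: print the first http(s) URL found in text on stdin.
jupyter_url() {
    grep -Eo 'https?://[^[:space:]]+' | head -n 1
}

# Sample line copied from the Jupyter example on this page.
line='To manually re-attach go to http://aa6-104.ecmwf.int:33698/?token=b1624da17308654986b1fd66ef82b9274401ea8982f3b747'
url=$(printf '%s\n' "$line" | jupyter_url)

hostport=${url#*://}      # strip the scheme
hostport=${hostport%%/*}  # strip the path and query string

echo "host: ${hostport%%:*}"   # prints host: aa6-104.ecmwf.int
echo "port: ${hostport##*:}"   # prints port: 33698
```

Note that the token in the URL grants access to your session, so treat any captured output as you would a password.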

[user@aa6-100 ~]$ conda activate {myEnv}

[user@aa6-100 ~]$ conda install ipykernel

[user@aa6-100 ~]$ python3 -m ipykernel install --user --name={myEnv}

The same applies if you want to make your own Python virtual environment visible in Jupyter Lab:

[user@aa6-100 ~]$ source {myEnv}/bin/activate

[user@aa6-100 ~]$ pip3 install ipykernel

[user@aa6-100 ~]$ python3 -m ipykernel install --user --name={myEnv}

Once you no longer need your personal kernels, you can remove them from Jupyter Lab with:

jupyter kernelspec uninstall {myEnv}
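After registering a kernel, you may want to confirm that Jupyter can see it. `jupyter kernelspec list` prints one kernel name and its directory per line, and the sketch below checks that listing for a given name. `kernel_registered` is a hypothetical helper name, and the sample listing, including the kernel name `myenv` and the paths, is made up for illustration.

```shell
# Hypothetical helper: succeed if kernel NAME appears in
# `jupyter kernelspec list` style output read from stdin.
kernel_registered() {
    awk -v name="$1" '$1 == name { found = 1 } END { exit !found }'
}

# Illustrative listing; the kernel name and paths are invented.
listing='Available kernels:
  python3    /usr/local/share/jupyter/kernels/python3
  myenv      /home/user/.local/share/jupyter/kernels/myenv'

if printf '%s\n' "$listing" | kernel_registered myenv; then
    echo "myenv is registered"
fi
```

With a real installation the check would read `jupyter kernelspec list | kernel_registered {myEnv}`.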

If you wish to run Jupyter Lab over HTTPS instead of plain HTTP, you may use the -J option of ecinteractive. In that case, a personal SSL certificate is created under ~/.ssl the first time, and is used to encrypt the HTTP traffic between your browser and the compute node.

In order to avoid browser security warnings, you may fetch the ~/.ssl/selfCA.crt certificate from the HPCF and import it into your browser as a trusted Certificate Authority. This is only needed once.