Check the initial conditions

The initial conditions are available under the master (troifs0) training account. You do not need to copy them, but you can check the content of the folder as well as the files.

troifs1@cca-login4:/perm/ectrain/troifs1> ls -ltr /perm/ectrain/troifs0/user-cray/oifs40r1/data/input/

| File name | Description | Size |
| --- | --- | --- |
| 2015120300/ICMCLgs0cINIT | input file containing surface and soil information (albedo, soil temperature etc.) | 9.3 MB |
| 2015120300/ICMGGgs0cINIT | input file containing gridpoint surface initial data | 7 MB |
| 2015120300/ICMSHgs0cINIT | input file containing initial data for the prognostic variables in spectral representation | 35 MB |
| 2015120300/ICMGGgs0cINIUA | input file containing initial data for the prognostic variables in gridpoint representation | 101 MB |
| 2015120300/wam_grid_tables | model grid and tables for the wave model | 52 MB |
| 2015120300/wam_subgrid_0 | information for model advection, including sub-grid parametrisation for the wave model | 12 MB |
| 2015120300/wam_subgrid_1 | information for model advection, including sub-grid parametrisation for the wave model | 25 MB |
| 2015120300/wam_subgrid_2 | information for model advection, including sub-grid parametrisation for the wave model | 25 MB |
| 2015120300/cdwavein | initial value of drag coefficient for the wave model | 63 KB |
| 2015120300/specwavein | initial wave spectra for the wave model | 7.6 MB |
| 2015120300/uwavein | initial value of wind speed for the wave model | 63 KB |
| 2015120300/sfcwindin | initial value of 10-metre horizontal wind components and sea ice fraction for the wave model | 2.2 MB |

The initial conditions are GRIB files. You can read their content using ecCodes commands, e.g.:

troifs1@cca-login4:/perm/ectrain/troifs1> grib_ls /perm/ectrain/troifs0/user-cray/oifs40r1/data/input/ICMSHgs0cINIT
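Before inspecting individual files, it can help to confirm that the whole set listed in the table is present. The following is a minimal sketch, not part of the original exercise: the function name `check_initial_conditions` is a hypothetical helper, and it assumes you pass the directory that directly contains the files.

```shell
# Sketch: report which of the expected initial-condition files are missing.
# check_initial_conditions is a hypothetical helper name; the file names are
# the ones listed in the table of initial conditions.
check_initial_conditions() {
    dir=$1
    missing=0
    for f in ICMCLgs0cINIT ICMGGgs0cINIT ICMSHgs0cINIT ICMGGgs0cINIUA \
             wam_grid_tables wam_subgrid_0 wam_subgrid_1 wam_subgrid_2 \
             cdwavein specwavein uwavein sfcwindin; do
        if [ ! -s "$dir/$f" ]; then      # -s: file exists and is not empty
            echo "missing or empty: $dir/$f"
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]                 # exit status 0 only if all are present
}
```

For example, `check_initial_conditions /perm/ectrain/troifs0/user-cray/oifs40r1/data/input/2015120300` prints nothing and returns success when all twelve files are in place.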

Edit the namelist

The namelist files control the necessary experiment settings (e.g., time step, experiment ID) as well as the post-processing. The namelists are in the nam directory. Go there and open the namelistfc file using a text editor, e.g.:

troifs1@cca-login4:/perm/ectrain/troifs1> cd oifs40r1/nam
troifs1@cca-login4:/perm/ectrain/troifs1/oifs40r1/nam> gvim namelistfc

The most important namelist elements are listed below with their explanation:

&NAMDYN ! Name of the namelist group
TSTEP=2700.0, ! Time step in seconds
/ ! End of the namelist group
&NAMFPG
NFPLEV=91, ! Number of vertical levels
NFPMAX=255, ! Spectral truncation
/
&NAMCT0
CNMEXP="gs0c", ! Experiment ID
NFRPOS=4, ! Output frequency of the 'history' files in time steps (i.e. here 3 hours)
NFRHIS=4, ! Output frequency of the model variables in time steps (i.e. here 3 hours)
/
&NAMFPC
! Pressure level outputs: number of fields (NFP3DFP), GRIB field codes (MFP3DFP) and pressure levels in Pascals (RFP3P)
NFP3DFP=9,
MFP3DFP(:)=129,130,135,138,155,157,133,131,132,
RFP3P(:)=100000.0,92500.0,85000.0,70000.0,50000.0,40000.0,30000.0,25000.0,20000.0,15000.0,10000.0,7000.0,5000.0,3000.0,2000.0,1000.0,700.0,500.0,300.0,200.0,100.0,
! Saving spectral orography (geopotential), surface pressure (logarithm of surface pressure) needed for post-processing
NFP2DF=2,
MFP2DF(:)=129,152,
! Physics output: number of fields (NFPPHY) and GRIB field codes (MFPPHY)
NFPPHY=89,
MFPPHY(:)=31,32,33,34,35,36,37,38,39,40,41,42,44,45,49,50,57,58,59,78,79,129,136,137,139,141,142,143,144,145,146,147,148,151,159,164,165,166,167,168,169,170,172,175,176,177,178,179,180,181,182,183,186,187,188,189,195,196,197,198,201,202,205,206,208,209,210,211,235,236,238,243,244,245,229,230,231,232,213,212,8,9,228089,228090,228001,260121,260123,228129,228130,
/
&NAMFPD
NLAT=256, ! Number of latitudes on the corresponding Gaussian grid
NLON=512, ! Number of longitudes on the corresponding Gaussian grid
/
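The "3 hours" quoted in the comments follows from the output frequency in time steps multiplied by the time step length. The sketch below reproduces that arithmetic; `output_interval_hours` is a hypothetical helper name, not an OpenIFS tool.

```shell
# Output interval in hours = frequency (in time steps) * time step (s) / 3600.
# output_interval_hours is a hypothetical helper; awk is used because TSTEP
# is written as a floating-point number in the namelist.
output_interval_hours() {
    tstep=$1      # TSTEP in seconds, e.g. 2700.0
    nfr=$2        # NFRPOS or NFRHIS in time steps, e.g. 4
    awk -v t="$tstep" -v n="$nfr" 'BEGIN { printf "%g\n", t * n / 3600 }'
}

output_interval_hours 2700.0 4    # prints 3
```

The same helper can be used to see the effect of a changed time step before editing the namelist.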

In the evaluation, we will investigate the following variables:

- mean sea level pressure: its GRIB code number is 151;

- 2-metre temperature: its code number is 167;

- precipitation: it is composed of large-scale and convective precipitation with code numbers 142 and 143, respectively;

- 10-metre wind gust: its code number is 49;

- temperature at 850 hPa level: its code number is 130;

- relative humidity at 700 hPa level: its code number is 157;

- geopotential at 500 hPa level: its code number is 129;

- u and v wind components at 250 and 100 hPa: their code numbers are 131 and 132, respectively.

These variables have to be included in the namelist among the post-processing variables (see the corresponding code numbers in MFP3DFP and MFPPHY and the pressure levels in RFP3P in the box above). More information about the namelist settings and GRIB field codes of different parameters can be found in the OpenIFS how-to articles: How to control OpenIFS output.
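A quick way to confirm that the codes listed above are actually requested is to search the code lists in the namelist file. This is only a sketch: `codes_of`, `has_code` and `report_missing_codes` are hypothetical helper names, and the sed pattern assumes that each code list sits on a single line, as in the box above.

```shell
# Sketch: check that required GRIB codes are requested in a namelist file.
codes_of() {      # codes_of VARIABLE FILE -> comma-separated code list
    sed -n "s/.*$1(:)=\([0-9,]*\).*/\1/p" "$2"
}

has_code() {      # has_code LIST CODE -> exit status 0 if CODE is in LIST
    # Surrounding commas prevent 15 from matching inside 151.
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

report_missing_codes() {   # report_missing_codes VARIABLE FILE CODE...
    var=$1; file=$2; shift 2
    list=$(codes_of "$var" "$file")
    for code in "$@"; do
        has_code "$list" "$code" || echo "$var lacks code $code"
    done
}
```

For the variables of this exercise, one would call `report_missing_codes MFPPHY namelistfc 151 167 142 143 49` and `report_missing_codes MFP3DFP namelistfc 130 157 129 131 132`; no output means that every code is present.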

Submit the experiment

The experiment will be executed by submission of the job_desmond file.

We submit the job with the qsub command:

troifs1@cca-login4:/perm/ectrain/troifs1/oifs40r1/nam> cd ../job
troifs1@cca-login4:/perm/ectrain/troifs1/oifs40r1/job> qsub job_desmond

After the job is submitted, you can check its status with the qstat command:

troifs1@cca-login4:/perm/ectrain/troifs1/oifs40r1/job> qstat -u $USER

ccapar:
                                                            Req'd  Req'd   Elap
Job ID          Username Queue    Jobname    SessID NDS TSK Memory Time  S Time
--------------- -------- -------- ---------- ------ --- --- ------ ----- - -----
1875659.ccapar  troifs1  np       troifs1     50171   3  72  241gb 30:00 R 00:10

If you see 'R' under 'S' in the output, your job is running. If it is in 'Q' status, it is still queueing. If qstat returns no output at all, your job has either finished successfully or failed.
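If you prefer to read the state programmatically, the status letter can be picked out of the qstat listing. The sketch below assumes the column layout shown above, where the state is the 10th whitespace-separated field; `job_state` is a hypothetical helper name.

```shell
# Sketch: print the state letter (column "S") of one job from qstat output.
# Reads the qstat listing on standard input; prints nothing if the job is
# not listed (i.e. it has finished or failed).
job_state() {
    job_id=$1
    awk -v id="$job_id" '$1 == id { print $10 }'
}
```

A typical call would be `qstat -u $USER | job_state 1875659.ccapar`, which prints `R` for the example listing above.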

When OpenIFS starts to run, you will see the following content in your working directory:

master.exe fort.4 # executable and namelist
wam_namelist wam_namelist_coupled_000 # namelists for the wave model
ICMGGgs0cINIUA ICMCLgs0cINIT ICMSHgs0cINIT ICMGGgs0cINIT # initial conditions
cdwavein sfcwindin specwavein uwavein # wave model initial conditions
wam_grid_tables wam_subgrid_0 wam_subgrid_1 wam_subgrid_2 # wave model files
ifsdata rtables 255l_2 # climate data
ncf927 ifs.stat NODE.001_01 # text output (log) files
out err # output and error files from the model run
ICMGGgs0c+000000 ICMSHgs0c+000000 ICMGGgs0c+000003 ICMSHgs0c+000003 # output files for the first two steps

The running time of the job is around 10 minutes, and the outputs (i.e. the ICM* files) are generated continuously during the run. If your job failed, you can find more information about the reason in the NODE.001_01 and err files. We suggest starting the inspection at the end of each file.
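Since the relevant messages are usually near the end of the logs, a small helper can print the last lines of both files at once. This is a sketch only: `show_log_tails` is a hypothetical name and the default of 30 lines is an arbitrary choice.

```shell
# Sketch: show the last lines of the log files that exist in a run directory.
show_log_tails() {
    run_dir=${1:-.}          # run directory, current directory by default
    lines=${2:-30}           # number of trailing lines to print
    for log in NODE.001_01 err; do
        if [ -f "$run_dir/$log" ]; then
            echo "==== $log ===="
            tail -n "$lines" "$run_dir/$log"
        fi
    done
}
```

Run `show_log_tails` in the working directory, or `show_log_tails . 100` if more context is needed.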

For more instructions and details about running the model, please visit the related page in the OpenIFS User Guides.

Post-processing the model outputs

After the job run is completed, the outputs are found in two kinds of files (located in the working directory): ICMGG* files contain the gridpoint fields and ICMSH* files contain the spectral ones. Together they should include all the variables requested in the namelist. However, some steps are still needed before the results can be visualized. The Metview macros prepared for visualization of experiment results require the meteorological variables in GRIB format, separated by variable and day, with the appropriate file names.

Go to the folder where your output files are saved. The 2-metre temperature, the precipitation, the mean sea level pressure and the wind gust are expected in gridpoint representation. They are prepared from the ICMGG* outputs with the following commands:

troifs1@cca-login4:/perm/ectrain/troifs1/oifs40r1/job> cd ../data/output/
troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> steps="00 03 06 09 12 15 18 21 24 27 30 33 36 39 42 45 48 51 54" # every 3 hours from 0 UTC on 4 December to 0 UTC on 6 December
troifs1@cca-login4:/perm/.../output> for step in ${steps}
do
  grib_copy -w shortName=2t ICMGG${expID}+0000${step} t2_${date}_${step}.grib # to get the 2-metre temperature
  grib_copy -w shortName=msl ICMGG${expID}+0000${step} mslp_${date}_${step}.grib # to get the mean sea level pressure
  grib_copy -w shortName=10fg ICMGG${expID}+0000${step} gust_${date}_${step}.grib # to get the 10-metre wind gust
done

Please do not forget to press Enter after the last command.
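The list of steps can also be generated rather than typed, which avoids typos when the forecast length or output frequency changes. The following is a minimal sketch; `make_steps` is a hypothetical helper whose arguments are the first step, the last step and the increment in hours.

```shell
# Sketch: build the zero-padded list of forecast steps.
make_steps() {
    h=$1
    out=""
    while [ "$h" -le "$2" ]; do
        out="$out$(printf '%02d' "$h") "   # pad to two digits: 0 -> 00, 3 -> 03
        h=$((h + $3))
    done
    echo "${out% }"                        # drop the trailing blank
}

steps=$(make_steps 0 54 3)
echo "$steps"
```

The resulting value of `steps` is identical to the hand-written list used in the loops of this section.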

For precipitation, both the convective and large-scale precipitation components have to be gathered in the same file:

troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> steps="00 03 06 09 12 15 18 21 24 27 30 33 36 39 42 45 48 51 54" # every 3 hours from 0 UTC on 4 December to 0 UTC on 6 December
troifs1@cca-login4:/perm/.../output> for step in ${steps}
do
  grib_copy -w shortName=lsp/cp ICMGG${expID}+0000${step} p_${date}_${step}.grib
done

The pressure level data are required in spectral representation. They are prepared from the ICMSH* outputs with the following commands:

troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> steps="00 03 06 09 12 15 18 21 24 27 30 33 36 39 42 45 48 51 54" # every 3 hours from 0 UTC on 4 December to 0 UTC on 6 December
troifs1@cca-login4:/perm/.../output> for step in ${steps}
do
  grib_copy -w shortName=t,level=850 ICMSH${expID}+0000${step} t850_${date}_${step}.grib # to get the temperature at 850 hPa
  grib_copy -w shortName=r,level=700 ICMSH${expID}+0000${step} q700_${date}_${step}.grib # to get the relative humidity at 700 hPa
  grib_copy -w shortName=z,level=500 ICMSH${expID}+0000${step} z500_${date}_${step}.grib # to get the geopotential at 500 hPa
done

For wind, both the u and v components have to be collected in the same file:

troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> steps="00 03 06 09 12 15 18 21 24 27 30 33 36 39 42 45 48 51 54" # every 3 hours from 0 UTC on 4 December to 0 UTC on 6 December
troifs1@cca-login4:/perm/.../output> for step in ${steps}
do
  grib_copy -w shortName=u/v,level=250 ICMSH${expID}+0000${step} u250_${date}_${step}.grib # to get the u and v components at 250 hPa
  grib_copy -w shortName=u/v,level=100 ICMSH${expID}+0000${step} u100_${date}_${step}.grib # to get the u and v components at 100 hPa
done

After these operations, the timesteps belonging to the same day have to be merged into a common file, and the concatenated file has to be moved to the input directory of the Metview visualization:

troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> variables="t2 mslp gust p t850 q700 z500 u250 u100"
troifs1@cca-login4:/perm/.../output> for variable in ${variables}
do
  cat ${variable}_${date}_*.grib > ${variable}_${date}.grib
done

If you are confident that all the necessary files have been generated, you can remove the temporary files from the directory:

troifs1@cca-login4:/perm/.../output> expID=gs0c; date=20151204
troifs1@cca-login4:/perm/.../output> variables="t2 mslp gust p t850 q700 z500 u250 u100"
troifs1@cca-login4:/perm/.../output> for variable in ${variables}
do
  /bin/rm ${variable}_${date}_*.grib
done

At the end you should have the following files:

troifs1@cca-login4:/perm/.../output> ls *grib
gust_20151204.grib # wind gust
p_20151204.grib # precipitation
t2_20151204.grib # 2-metre temperature
mslp_20151204.grib # mean sea level pressure
q700_20151204.grib # 700 hPa relative humidity
t850_20151204.grib # 850 hPa temperature
u100_20151204.grib # 100 hPa wind
u250_20151204.grib # 250 hPa wind
z500_20151204.grib # 500 hPa geopotential
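The extraction loops in this section differ only in the output prefix, the grib_copy selection and the type of source file, so they can be driven from a single table. The sketch below only prints the commands so that they can be reviewed before being run; `print_extract_commands` and the `prefix|selection|file type` table layout are illustrative assumptions, while the selections themselves are the ones used above.

```shell
# Sketch: print (not run) the grib_copy commands for all nine variables.
print_extract_commands() {
    expID=$1; date=$2; steps=$3
    # Each table row: output prefix | grib_copy -w selection | GG or SH file
    while IFS='|' read -r prefix where kind; do
        for step in $steps; do
            echo "grib_copy -w $where ICM${kind}${expID}+0000${step} ${prefix}_${date}_${step}.grib"
        done
    done <<'EOF'
t2|shortName=2t|GG
mslp|shortName=msl|GG
gust|shortName=10fg|GG
p|shortName=lsp/cp|GG
t850|shortName=t,level=850|SH
q700|shortName=r,level=700|SH
z500|shortName=z,level=500|SH
u250|shortName=u/v,level=250|SH
u100|shortName=u/v,level=100|SH
EOF
}
```

Calling `print_extract_commands gs0c 20151204 "00 03"` lists 18 commands; once they look right, the output can be piped to `sh` in the output directory.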

Create directory structure for visualization on the cluster of the University of Reading

Open a terminal window on the cluster of the University of Reading (please do not forget to log in with ssh -X username@racc.rdg.ac.uk). The tutorial explains the operations on the cluster using the swx18101 account; you should replace it with your own user account. Let us assume that the current directory is the ${HOME} directory. The necessary folders are automatically created by the following command:

[swx18101@racc-login-0-4 ~] . /home/users/swx18100/Monday_training/Desmond_casestudy/create_environment

Please note that this command can be started from any directory and in this case you should not change the swx18100 user ID in the path.

This command creates the following structure in your home directory:

[swx18101@racc-login-0-4 ~] cd
[swx18101@racc-login-0-4 ~] find .
.
./Monday_training # Directory for training exercises on Monday
./Monday_training/Desmond_casestudy # Directory for exercise with Desmond case study
./Monday_training/Desmond_casestudy/data # Directory for input data
./Monday_training/Desmond_casestudy/data/forecast
./Monday_training/Desmond_casestudy/data/forecast/gs0c
./Monday_training/Desmond_casestudy/data/reference
./Monday_training/Desmond_casestudy/macros # Directory for Metview macros
./Monday_training/Desmond_casestudy/macros/plot_forecastrun.mv
./Monday_training/Desmond_casestudy/macros/plot_ERA5.mv
./Monday_training/Desmond_casestudy/figures # Directory for figures to save
./Monday_training/Desmond_casestudy/definitions # Directory for some visual definitions
./Monday_training/Desmond_casestudy/definitions/base_title.mv
./Monday_training/Desmond_casestudy/definitions/base_legend.mv
./Monday_training/Desmond_casestudy/definitions/base_layout.mv
./Monday_training/Desmond_casestudy/definitions/visdef
./Monday_training/Desmond_casestudy/definitions/diff_range
./metview/Desmond_casestudy # Link to casestudy folder in metview directory

Transfer the forecast data to the cluster of the University of Reading

Navigate to the directory prepared for the forecast data in the folder of the Desmond case study, then open an sftp session to the ECMWF gateway and fetch the GRIB files from the output directory:

[swx18101@racc-login-0-4 ~]$ cd Monday_training/Desmond_casestudy/data/forecast/gs0c
[swx18101@racc-login-0-4 gs0c]$ sftp -r troifs1@ecaccess.ecmwf.int
cd ECHOST/cca/perm/ectrain/troifs1/oifs40r1/data/output
mget *grib
bye

Download the re-analysis data

As reference data, we use ERA5 re-analyses. ERA5 datasets are public and available in the ECMWF MARS (Meteorological Archival and Retrieval System). Re-analyses are created by optimal combination of available observational information and short-range numerical weather predictions using data assimilation techniques. They provide the most comprehensive description of the past and current states of the 3-dimensional atmosphere or the Earth system.

ERA5 (Hersbach and Dee, 2016; Hersbach et al., 2018) is being constructed from 1950 onwards at a higher horizontal resolution than ERA-Interim (32 km), with 137 vertical levels. Analysis fields are prepared hourly with the inclusion of newly reprocessed observational data, using the 4D-Var data assimilation technique and the IFS cycle 41r2 model version (operational in 2016). ERA5 forecasts initialized from the analyses at 6 and 18 UTC produce hourly outputs up to 18 hours and give an estimate of forecast uncertainty. There is an important difference between ERA-Interim and ERA5 in the handling of accumulated parameters: in ERA5 the accumulation is calculated from the previous post-processing step (i.e., over one hour), while in ERA-Interim it is calculated from the beginning of the forecast. This difference is relevant when evaluating the precipitation amount and wind gust. (More information about the characteristics of ERA5 can be found in the Copernicus Knowledge Base: What are the changes from ERA-Interim to ERA5?)
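To illustrate the difference in accumulation conventions: values accumulated from the beginning of a forecast can be converted to per-interval amounts by differencing consecutive steps. The sketch below shows only this arithmetic on made-up numbers; `deaccumulate` is a hypothetical helper and is not part of the Metview macros used in this exercise.

```shell
# Sketch: turn values accumulated from the start of a forecast into
# per-interval amounts. Reads one accumulated value per line.
deaccumulate() {
    awk 'NR == 1 { prev = $1; printf "%.2f\n", $1; next }
         { printf "%.2f\n", $1 - prev; prev = $1 }'
}

# Made-up accumulated precipitation (mm) at four consecutive output steps.
printf '%s\n' 0.0 1.5 4.0 4.2 | deaccumulate
```

For the sample input, the per-interval amounts are 0.00, 1.50, 2.50 and 0.20 mm.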

The data are provided on the ECMWF download server in the proper format needed for visualization with Metview or they can be downloaded in their original format from the ECMWF MARS system. This retrieval has to be accomplished only once, if we download the data for the whole period of the case study. Steps needed to download the re-analysis GRIB files from the download server into the input directory of the Metview visualization are:

[swx18101@racc-login-0-4 Desmond_gs0c]$ cd ../../reference
[swx18101@racc-login-0-4 Desmond_gs0c]$ wget -c http://download.ecmwf.int/test-data/openifs/reference_casestudies/data/Desmond_201512/reference/ea_20151201-20151206.tar.gz

Uncompress the .tar.gz file:

[swx18101@racc-login-0-4 Desmond_gs0c]$ tar -zxvf ea_20151201-20151206.tar.gz

Plotting

Start Metview by typing metview and navigate to the Desmond_casestudy folder. In the macros directory you will see two macros: plot_forecastrun.mv visualizes the results of the experiment, and plot_ERA5.mv visualizes the ERA5 re-analysis data.

Forecast maps

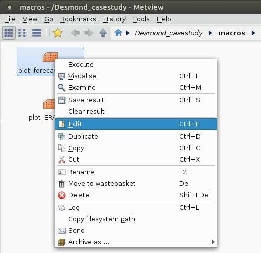

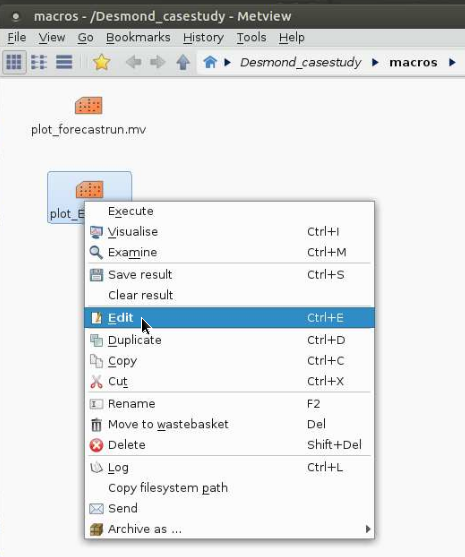

Right-click the macro and select Edit from the menu. A window pops up in which you can edit parameters such as the meteorological variables to plot, the geographical area and the verification date.

After saving the modifications, the macro can be executed either from the macro window by clicking the blue arrow in the top bar, or by right-clicking the macro and selecting Execute from the menu.

Figures can be saved by selecting Export from the File menu. The timesteps are saved as individual pages in a multi-page .ps file.

Re-analysis maps

Right-click the macro and select Edit from the menu. A window pops up in which you can edit parameters such as the meteorological variables to plot, the geographical area and the verification date. Please note that fewer variables can be chosen here.

Figures can be saved in the same way as before. The timesteps are saved as individual pages in a multi-page .ps file.

At the end, you can compare your results (i.e. your plots) with the reference figures which are available on the ECMWF download server.

Troubleshooting

This section collects some cases in which the model run or the forecast evaluation can terminate with an error.

Model run

- The initial conditions are prepared for fixed dates and spatial resolutions. Make sure that your settings match them.

- The initial conditions are prepared with a fixed experiment ID that you can identify from the file names (e.g., in ICMGGgs0cINIT the experiment ID is gs0c). Please make sure that you use the proper expID in both the job and the namelist.

- Further possible issues regarding running the OpenIFS and their solution are listed in the OpenIFS FAQ.
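The experiment ID check described in the list above can be sketched as follows. Both function names are hypothetical; the first relies on the ID occupying characters 6 to 9 of the file name (as in ICMGGgs0cINIT), and the second assumes the namelist contains a line of the form CNMEXP="gs0c".

```shell
# Sketch: compare the experiment ID embedded in an initial-condition file
# name with CNMEXP in the namelist.
expid_from_file() {
    # ICMGGgs0cINIT -> gs0c: characters 6 to 9 of the base name
    basename "$1" | cut -c6-9
}

expid_from_namelist() {
    # Picks the quoted value from a line such as: CNMEXP="gs0c",
    sed -n 's/.*CNMEXP="\([^"]*\)".*/\1/p' "$1"
}
```

If `expid_from_file 2015120300/ICMGGgs0cINIT` and `expid_from_namelist namelistfc` print different strings, the settings are inconsistent.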

Visualization with Metview

- Metview macros can stop with an error message if the recommended software versions are not used. The supported (minimum) versions are GRIB-API 1.18.0, ecCodes 2.5.0 and Metview 4.7.1.

- Metview macros stop with an error if the include statements in the .mv files are not set properly:

base_visdef: No such file or directory
macro - ERROR - 20180611.144342 - Line 151: Cannot include file
metview: EXIT on ERROR (line 1), exit status 1, starting 'cleanup'

- Metview macros partly require a mandatory directory structure. The folder of the input data can be arbitrary; however, under this directory the macros expect the re-analysis fields in the subdirectory named reference and the forecast data in the subdirectory named after the 4-character experiment ID (i.e., data/reference and data/forecast/gs0c etc.). Please make sure that the input data are stored in these folders with the proper file names (more information about the requested file names can be found here).
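If you set up the input folders by hand, for instance for a further experiment, the layout described in the last item can be created in one step. This is a sketch under assumptions: `make_data_layout` is a hypothetical helper and `ab12` in the usage note is a made-up experiment ID.

```shell
# Sketch: create the directory layout expected by the Metview macros.
make_data_layout() {
    base=$1       # arbitrary input data folder, e.g. .../Desmond_casestudy/data
    expid=$2      # 4-character experiment ID
    mkdir -p "$base/reference" "$base/forecast/$expid"
}
```

For example, `make_data_layout ~/Monday_training/Desmond_casestudy/data ab12` adds a forecast/ab12 folder next to the existing forecast/gs0c one and leaves existing directories untouched.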

References

Hersbach, H., de Rosnay, P., Bell, B., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Alonso-Balmaseda, A., Balsamo, G., Bechtold, P., Berrisford, P., Bidlot, J-R., de Boisséson, E., Bonavita, M., Browne, P., Buizza, R., Dahlgren, P., Dee, D., Dragani, R., Diamantakis, M., Flemming, J., Forbes, R., Geer, A., Haiden, T., Hólm, E., Haimberger, L., Hogan, R., Horányi, A., Janisková, M., Laloyaux, P., Lopez, P., Muñoz-Sabater, J., Peubey, C., Radu, R., Richardson, D., Thépaut, J-N., Vitart, F., Yang, X., Zsótér, E., Zuo, H., 2018: Operational global reanalysis: progress, future directions and synergies with NWP. ECMWF ERA Report Series 27.

Hersbach, H., Dee, D.P., 2016: ERA5 reanalysis is in production. ECMWF Newsletter 147, p. 7.

Szépszó, G., Carver, G., 2018: New forecast evaluation tool for OpenIFS. ECMWF Newsletter 156, 14–15.