Access to GPUs is not enabled by default for all tenants. To request access, raise an issue on the support portal or contact support@europeanweather.cloud.

The current pilot infrastructure at ECMWF features 2x5 NVIDIA Tesla V100 cards targeting Machine Learning workloads. They are exposed to the cloud instances as virtual GPUs, which allows multiple VMs to transparently share the same physical GPU card.

How to provision a GPU-enabled instance

Once your tenant has been granted access to the GPUs, creating a new VM with access to a virtual GPU is straightforward. Follow the process described in "Provision a new instance - web", paying special attention to the Configuration step:

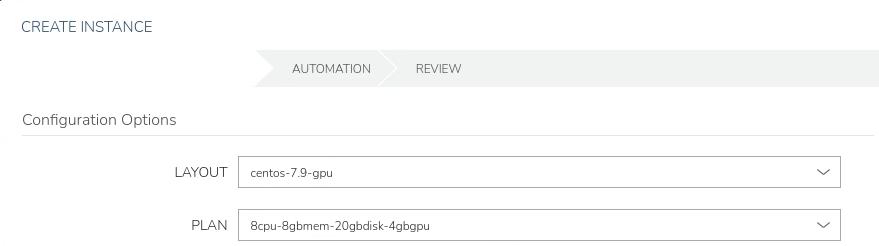

CentOS

- On the Library screen, choose CentOS.

- On Layout, select the item with the "-gpu" suffix (e.g. "centos-7.9-gpu").

- On Plan, pick one of the plans with the "gpu" suffix, depending on the resources you need, including the amount of GPU memory:

  - 8cpu-4gbmem-20gbdisk-4gbgpu

  - 8cpu-8gbmem-20gbdisk-4gbgpu

  - 8cpu-32gbmem-40gbdisk-8gbgpu

  - 16cpu-32gbmem-80gbdisk-16gbgpu

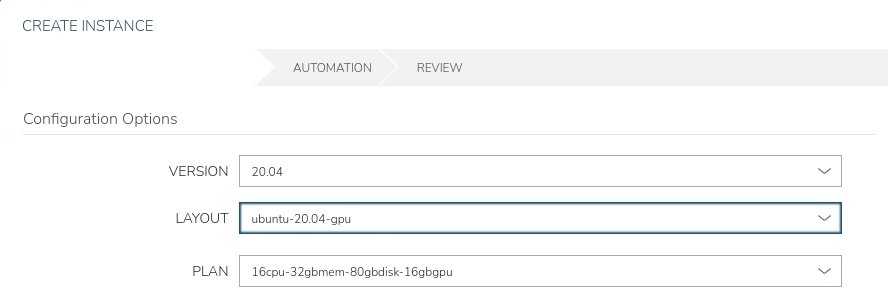
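The plan names above appear to encode the resources in four dash-separated fields (CPU count, RAM in GB, disk in GB, GPU memory in GB). As a minimal sketch under that assumption, the hypothetical helper `pick_plan` below prints the first listed plan that meets a minimum RAM and GPU memory requirement. It is not part of any EWC tooling, and the plan list is simply copied from this page.

```shell
# Plans copied from this page, ordered from smallest to largest (assumption).
PLANS="8cpu-4gbmem-20gbdisk-4gbgpu
8cpu-8gbmem-20gbdisk-4gbgpu
8cpu-32gbmem-40gbdisk-8gbgpu
16cpu-32gbmem-80gbdisk-16gbgpu"

# Hypothetical helper: pick_plan MIN_RAM_GB MIN_GPU_GB
# Prints the first plan whose RAM and GPU memory are both large enough.
pick_plan() {
  printf '%s\n' "$PLANS" | awk -F- -v m="$1" -v g="$2" '
    { mem = $2 + 0; gpu = $4 + 0 }          # "32gbmem" + 0 evaluates to 32
    mem >= m && gpu >= g { print; exit }'
}
```

For example, `pick_plan 16 8` prints `8cpu-32gbmem-40gbdisk-8gbgpu`. If no plan qualifies, nothing is printed.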

Ubuntu

- On the Library screen, choose Ubuntu.

- On Layout, select the item with the "-gpu" suffix (e.g. "ubuntu-20.04-gpu").

- On Plan, select the following plan for the Ubuntu instance: 16cpu-32gbmem-80gbdisk-16gbgpu ( * )

( * ) Note: the "16cpu-32gbmem-80gbdisk-16gbgpu" plan has limited availability in the current EWC infrastructure. Please contact the EWC team via the Support Portal if you encounter any problems during deployment, as they may be caused by a temporary lack of availability.

Once your instance is running, you can check whether it can see the GPU with:

    $> nvidia-smi
    Wed Mar 29 09:17:13 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 450.156.00   Driver Version: 450.156.00   CUDA Version: 11.0     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  GRID V100-16C       On   | 00000000:00:05.0 Off |                    0 |
    | N/A   N/A    P0    N/A /  N/A |   1168MiB / 16384MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+
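For scripted checks, for example at the start of a batch job, the same information can be requested in machine-readable form. The sketch below assumes the standard `nvidia-smi` query options `--query-gpu` and `--format=csv,noheader,nounits`. `gpu_summary` is a hypothetical helper name, not something shipped with the driver or the EWC images.

```shell
# Hypothetical helper: reads "name, memory.total, memory.used" CSV rows
# (values in MiB, no header, no units) and prints one summary line per GPU.
gpu_summary() {
  awk -F', *' '{ printf "%s: %d MiB free of %d MiB\n", $1, $2 - $3, $2 }'
}

# Only query the driver when nvidia-smi is actually installed.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=name,memory.total,memory.used \
             --format=csv,noheader,nounits | gpu_summary
else
  echo "nvidia-smi not found: this does not look like a GPU-enabled instance" >&2
fi
```

With the values from the sample output above (16384 MiB total, 1168 MiB used), this would print `GRID V100-16C: 15216 MiB free of 16384 MiB`.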